Few-Shot Design Optimization by Exploiting Auxiliary Information

Department of Computer Science, Columbia University

Many real-world design problems, such as hardware design or drug discovery, involve optimizing an expensive black-box function f(x). Bayesian optimization has emerged as a sample-efficient framework for this problem. However, the basic setting these methods consider is simplified compared to real-world experimental setups, where experiments often generate a wealth of useful information. We introduce a new setting in which each experiment generates high-dimensional auxiliary information h(x) along with the performance measure f(x); in addition, a history of previously solved tasks from the same task family is available to accelerate optimization. A key challenge in this setting is learning how to represent and use h(x) to efficiently solve new optimization tasks beyond the task history. We develop a novel approach based on a neural model that predicts f(x) for unseen designs given a few-shot context containing observations of h(x). We evaluate our method on two challenging domains, robotic hardware design and neural network hyperparameter tuning, and introduce a novel design problem and large-scale benchmark for the former. On both domains, our method uses auxiliary feedback effectively to achieve more accurate few-shot prediction and faster optimization of design tasks, significantly outperforming several methods for multi-task optimization.

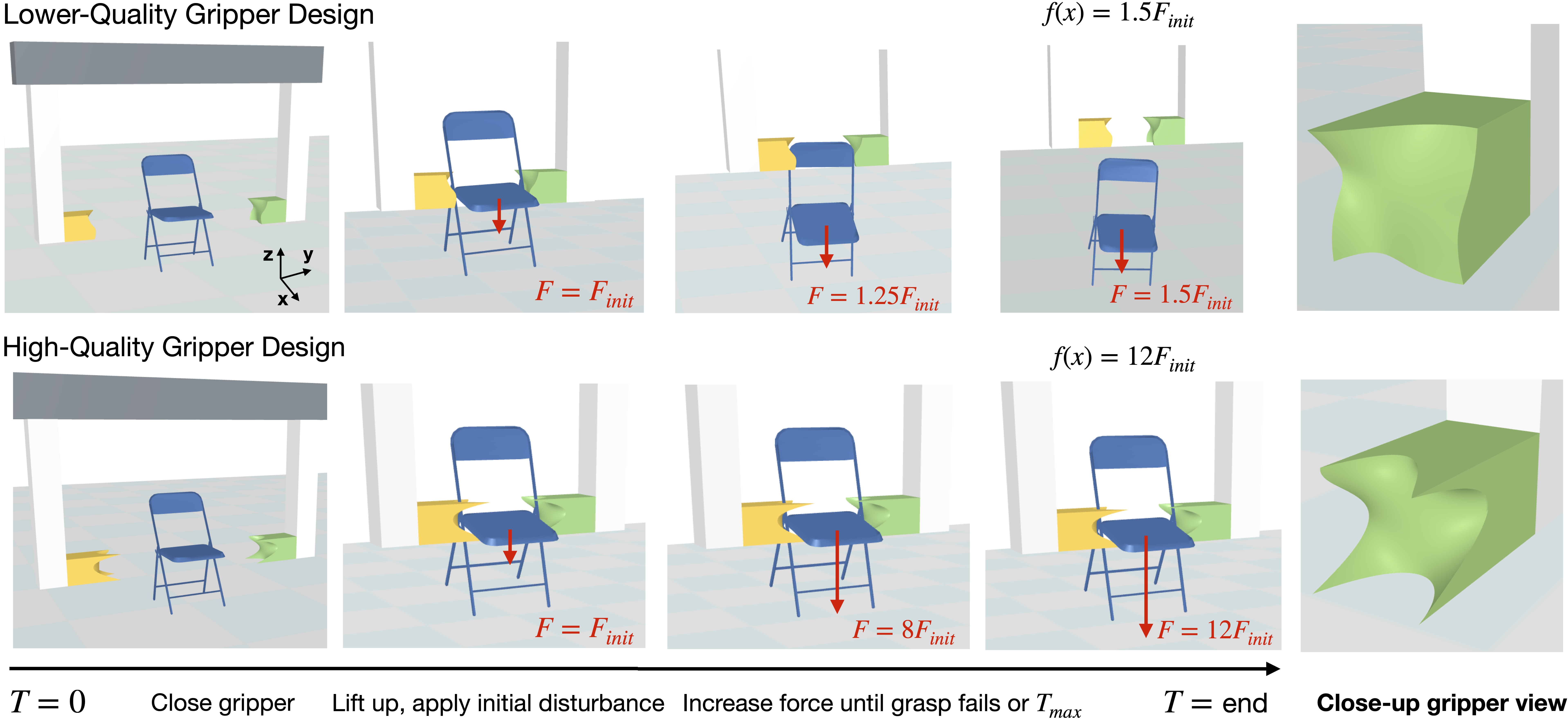
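To make the setting concrete, the following is a minimal toy sketch of a few-shot optimization loop in which every evaluation returns both f(x) and auxiliary feedback h(x). Everything in it is an illustrative assumption and not the method from the paper: the task (a hidden optimum `c`), the form of `h`, and the hand-written `predict_f`, which stands in for the learned neural predictor. The names `make_task`, `predict_f`, and `optimize` are hypothetical.

```python
import random


def make_task(dim, rng, noise=0.01):
    """Toy task with a hidden optimum c (not visible to the optimizer)."""
    c = [rng.uniform(-1.0, 1.0) for _ in range(dim)]

    def evaluate(x):
        # Performance f(x): negative squared distance to the hidden optimum.
        f = -sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        # Auxiliary feedback h(x): a noisy vector pointing from c to x.
        h = [xi - ci + rng.gauss(0.0, noise) for xi, ci in zip(x, c)]
        return f, h

    return evaluate


def predict_f(context, x):
    """Hand-written stand-in for a learned few-shot predictor of f(x)."""
    n = len(context)
    # Each context entry (x_i, f_i, h_i) yields an estimate x_i - h_i of c.
    c_hat = [sum(xc[k] - hc[k] for xc, _, hc in context) / n
             for k in range(len(x))]
    return -sum((xi - ci) ** 2 for xi, ci in zip(x, c_hat))


def optimize(evaluate, candidates, rounds, rng):
    """Greedy loop: evaluate a design, extend the context, pick the next one."""
    x = rng.choice(candidates)  # the first design is chosen at random
    context = []
    for _ in range(rounds):
        f, h = evaluate(x)
        context.append((x, f, h))
        tried = {xc for xc, _, _ in context}
        untried = [cand for cand in candidates if cand not in tried]
        if not untried:
            break
        # Next design: the untried candidate with the highest predicted f.
        x = max(untried, key=lambda cand: predict_f(context, cand))
    # Return the best (x, f, h) entry observed so far.
    return max(context, key=lambda entry: entry[1])
```

Calling `optimize(make_task(3, rng), candidates, rounds=3, rng=rng)` with `rng = random.Random(0)` and a list of candidate design tuples returns the best evaluated design together with its f and h. In this toy, a single observation of h(x) already locates the optimum approximately, which is the intuition behind using auxiliary feedback; in the real setting the mapping from h(x) to useful task information has to be learned from the task history.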

We introduce a new design problem: designing robotic grippers that grasp objects as stably as possible, using tactile feedback from the object during each grasp attempt. Our benchmark dataset contains 4.3 million gripper design evaluations across 1000 diverse objects (i.e., 1000 design tasks). Designs are parametrized as a cubic Bézier surface, and the initial height of the gripper is also optimized. The figure below shows an example design task and our simulation evaluating grasp stability.

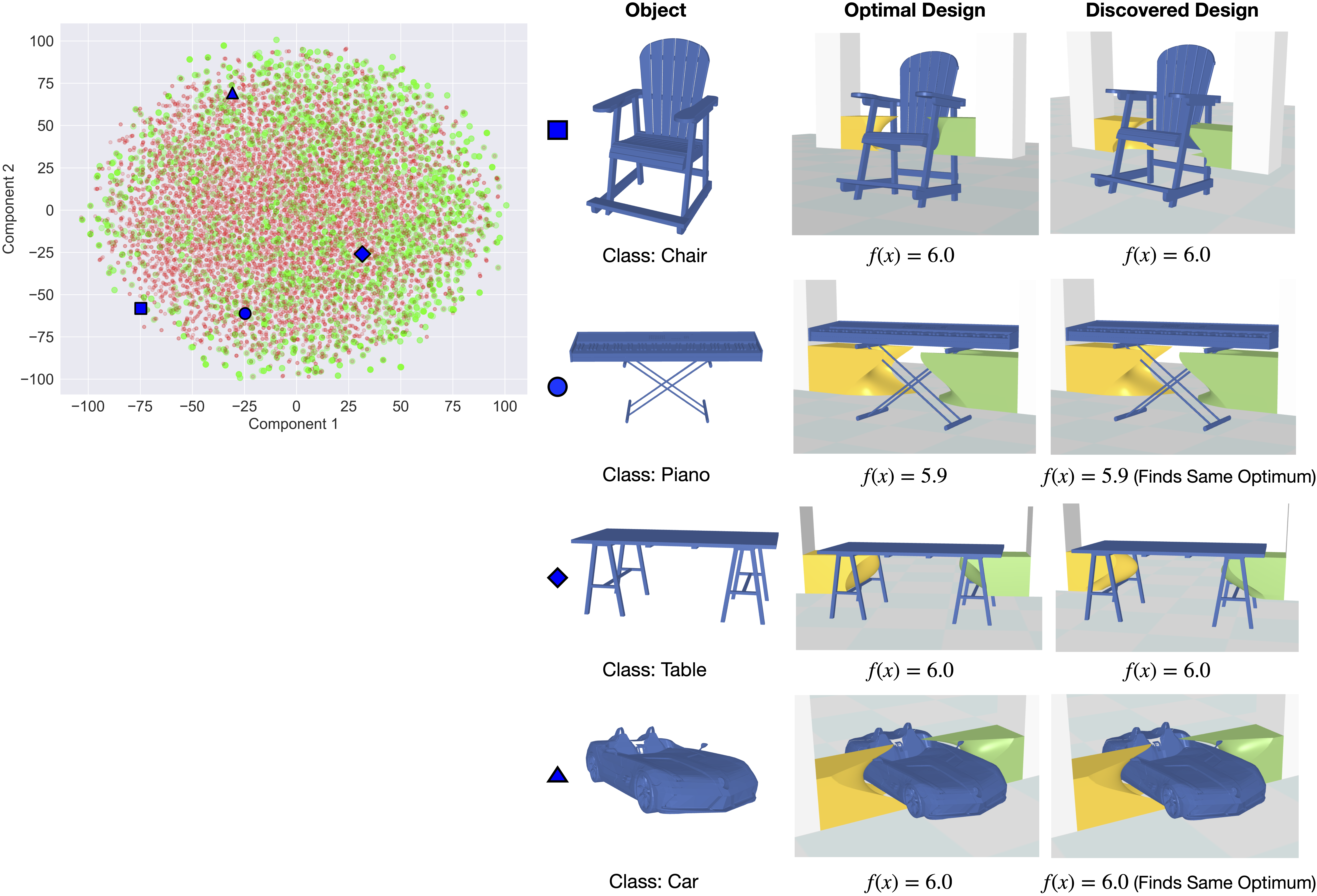
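As a rough illustration of the Bézier parametrization mentioned above, the sketch below evaluates a point on a bicubic Bézier surface using the standard Bernstein-polynomial form. The 4x4 control grid is an assumption (the usual choice for a surface that is cubic in both directions); the exact parametrization used in the benchmark may differ, and the function names are hypothetical.

```python
from math import comb


def bernstein3(i, t):
    """Cubic Bernstein basis polynomial B_{i,3}(t) for i in 0..3."""
    return comb(3, i) * (t ** i) * ((1 - t) ** (3 - i))


def bezier_surface_point(control, u, v):
    """Evaluate a bicubic Bezier surface at (u, v), with u and v in [0, 1].

    control is a 4x4 grid of (x, y, z) control points (assumed layout).
    """
    point = [0.0, 0.0, 0.0]
    for i in range(4):
        for j in range(4):
            # Tensor-product weight of control point (i, j).
            w = bernstein3(i, u) * bernstein3(j, v)
            for k in range(3):
                point[k] += w * control[i][j][k]
    return tuple(point)
```

Under this assumption, a design is a set of control-point coordinates; moving a control point smoothly deforms the surface, and the surface always passes through the four corner control points.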

Below are several examples of gripper design tasks from the test set. For each task, the optimal design in the dataset is shown alongside the design our model discovers during optimization. Our model discovers sophisticated, creative grasping strategies that maximize grasp stability, such as clasping the seat of a chair to resist disturbances (first row), clamping thin structures in the object like piano legs (second row), and protruding into gaps in the object (third row). Zoom into each row for best viewing.

@article{mani2026designopt,
  title={Few-Shot Design Optimization by Exploiting Auxiliary Information},
  author={Mani, Arjun and Vondrick, Carl and Zemel, Richard},
  journal={arXiv preprint arXiv:2602.12112},
  year={2026}
}